Do you read a lot? No?

Well, let’s say you’re at a library looking for information on a specific topic. Instead of just browsing through every book on the shelves, you ask the librarian for help.

The librarian quickly finds the most relevant books and hands them to you. This combination of your question and the librarian’s expertise helps you get the best information efficiently.

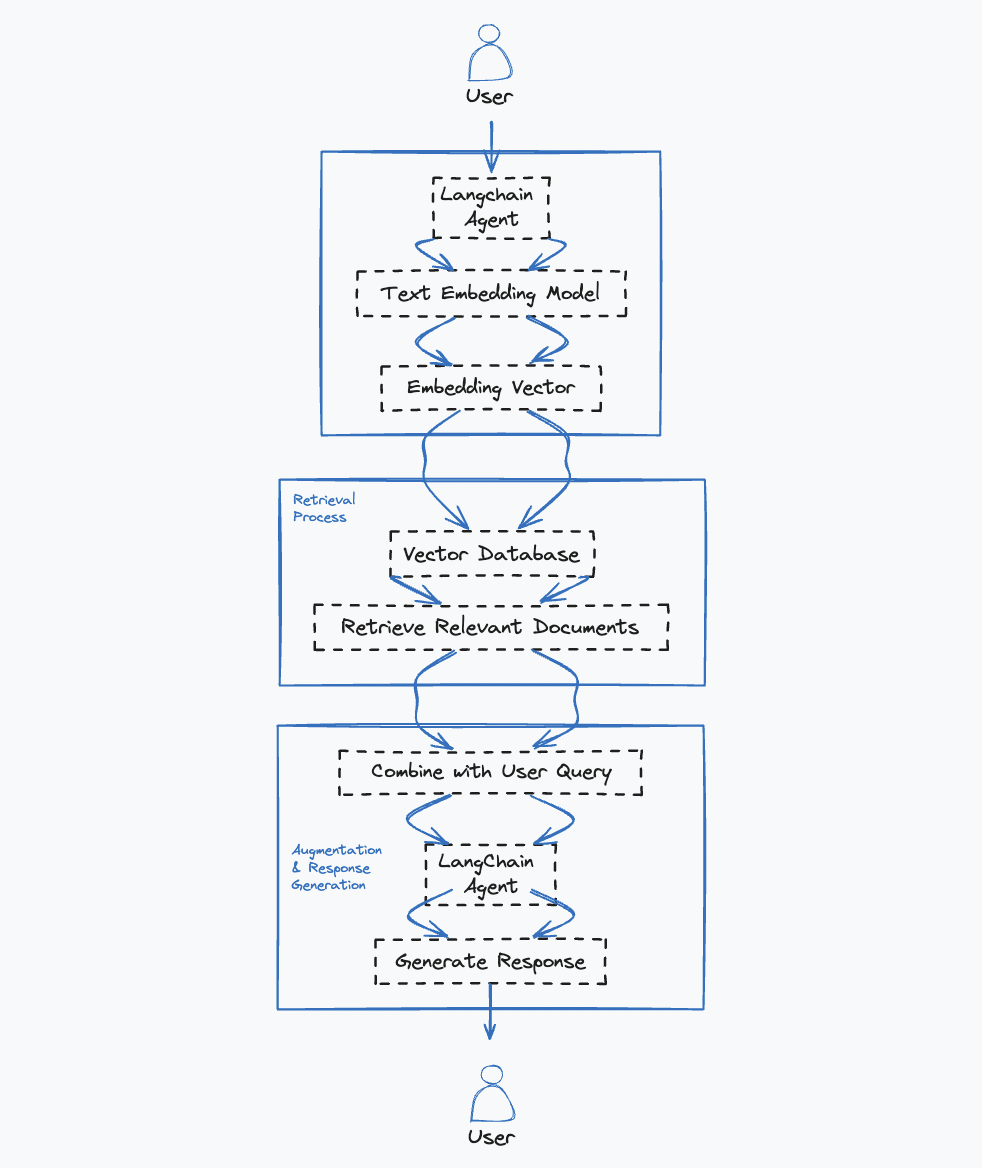

In the world of AI, we have a similar approach called Retrieval Augmented Generation (RAG). It combines retrieval (finding relevant information) with generation (creating responses) to improve the accuracy and relevance of AI outputs. In this post, we’ll explore how to implement RAG using Vertex AI and LangChain, two widely used tools in the AI ecosystem.

What is RAG?

RAG works by first retrieving relevant information from a knowledge base and then using a language model to generate a response based on that information. This helps ensure that the AI’s responses are not only accurate but also contextually rich and informative.
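To make the retrieve-then-generate flow concrete, here is a minimal pure-Python sketch. It is a toy under stated assumptions: the function names (`tokenize`, `retrieve`, `generate`, `toy_rag`) are made up for illustration, retrieval is plain word overlap rather than a real search index, and `generate` is a stand-in that only echoes the prompt a language model would receive.

```python
import re

def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=1):
    """Rank documents by how many query words they share and return the best top_k."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def generate(query, context):
    """Stand-in for a language model call (assumption): echoes the prompt it would get."""
    return f"Context: {' '.join(context)}\nQuestion: {query}"

def toy_rag(query, documents):
    """Retrieve first, then generate from what was retrieved."""
    return generate(query, retrieve(query, documents))

docs = [
    "The capital of France is Paris.",
    "Python is a versatile programming language.",
]
print(toy_rag("What is the capital of France?", docs))
```

In a real system, the overlap score would be replaced by a proper retriever and the echo by a model call, but the two-stage shape stays the same.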

Why use RAG?

- Improved Accuracy: By grounding responses in real, relevant data, RAG reduces the chances of generating incorrect information.

- Contextual Relevance: Combining retrieval with generation ensures that the responses are tailored to the specific context of the query.

- Enhanced Capabilities: RAG can handle more complex queries by leveraging a large base of pre-existing information.
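Grounding, the idea behind the first two points, mostly comes down to how the prompt is assembled. The sketch below shows one possible way to do it; the function name `build_grounded_prompt` and the instruction wording are illustrative assumptions, not part of Vertex AI or LangChain.

```python
def build_grounded_prompt(question, passages):
    """Assemble a prompt that asks the model to answer only from the given passages."""
    # Number each passage so an answer can point back to its source.
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the passages below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Passages:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_grounded_prompt(
    "What is the capital of France?",
    ["The capital of France is Paris."],
)
print(prompt)
```

Telling the model to admit when the passages lack an answer is a common way to discourage it from guessing, though how well it works depends on the model.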

Getting Started with Vertex AI and LangChain

Let’s dive into a practical example of how to set up a RAG system using Vertex AI’s text-bison model and LangChain.

Step 1: Setting Up Your Environment

First, ensure you have the necessary libraries installed. You’ll need google-cloud-aiplatform for Vertex AI and langchain for managing the retrieval and generation process.

# Install necessary libraries
pip install google-cloud-aiplatform langchain

Step 2: Initialize Vertex AI

Set up Vertex AI with your Google Cloud project details.

from google.cloud import aiplatform

aiplatform.init(project='your-project-id', location='your-region')

Step 3: Create a Knowledge Base

For the retrieval part, you need a knowledge base. This can be a simple list of documents or more complex indexed data.
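As an illustration of the "indexed data" end of that range, the sketch below builds a small inverted index that maps each word to the documents containing it. The helper names (`build_inverted_index`, `lookup`) and the sample documents are assumptions made for this example; production systems typically use a search engine or vector store instead.

```python
import re
from collections import defaultdict

def build_inverted_index(documents):
    """Map each lowercase word to the set of document positions that contain it."""
    index = defaultdict(set)
    for doc_id, text in enumerate(documents):
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(doc_id)
    return index

def lookup(index, documents, word):
    """Return the documents containing the given word, in their original order."""
    return [documents[i] for i in sorted(index.get(word.lower(), set()))]

sample_docs = [
    "The capital of France is Paris.",
    "Python is a versatile programming language.",
]
index = build_inverted_index(sample_docs)
print(lookup(index, sample_docs, "Python"))
```

With an index like this, a lookup touches only the documents that mention the word instead of scanning the whole list. For the rest of this walkthrough, a plain list is enough.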

knowledge_base = [
    "The capital of France is Paris.",
    "The Great Wall of China is located in northern China.",
    "Python is a versatile programming language.",
]

Step 4: Define the Retrieval Function

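Use LangChain to define a retrieval function that finds the most relevant information in your knowledge base. This example uses BM25Retriever, a keyword-based retriever from the langchain-community package.

# Requires: pip install langchain-community rank_bm25
from langchain_community.retrievers import BM25Retriever

# Build a keyword (BM25) retriever over the in-memory documents
retriever = BM25Retriever.from_texts(knowledge_base)

def retrieve_relevant_info(query):
    # invoke() returns Document objects ranked by relevance; keep only their text
    documents = retriever.invoke(query)
    return "\n".join(doc.page_content for doc in documents)

Step 5: Integrate with Vertex AI’s text-bison Model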

Use Vertex AI’s text-bison model to generate responses based on the retrieved information.

from vertexai.language_models import TextGenerationModel

# Load the text-bison model once; this relies on the aiplatform.init(...) call from Step 2
model = TextGenerationModel.from_pretrained("text-bison")

def generate_response(prompt, retrieved_info):
    # Put the retrieved context ahead of the question so the model can ground its answer
    full_prompt = f"Context:\n{retrieved_info}\n\nQuestion: {prompt}"
    response = model.predict(full_prompt, temperature=0.2, max_output_tokens=256)
    return response.text

def rag_system(query):
    # Retrieve supporting text first, then generate an answer from it
    retrieved_info = retrieve_relevant_info(query)
    response = generate_response(query, retrieved_info)
    return response

Step 6: Test Your RAG System

Now, let’s test the RAG system with a sample query.

query = "What is the capital of France?"

response = rag_system(query)

print(response)

Wrap-Up

Retrieval Augmented Generation, built with tools like Vertex AI and LangChain, pairs information retrieval with text generation models to produce more accurate, context-aware responses. Whether you’re crafting personalized resumes or generating informative articles, RAG can improve both the efficiency and the quality of content creation.

Vertex AI supplies the hosted model and LangChain the retrieval plumbing, so together they cover both halves of a RAG pipeline. Grounding the model in retrieved documents improves accuracy and gives users more relevant, contextually rich answers.

With this setup, you are well on your way to building more intelligent and useful AI solutions.